Executives love to call AI a “black box.” The term shows up in boardrooms, risk reports, and industry conferences. The assumption is simple: data goes in, results come out, and no one can explain what happens in between.

But here’s the reality: AI doesn’t have to be a black box.

It only feels that way when vendors hide behind complexity or avoid showing how their systems work.

Gartner predicts that by 2026, 60% of large enterprises will adopt AI governance tools focused on explainability and accountability. That shift is happening because enterprises no longer accept “just trust the model” as an answer.

If an AI vendor can’t explain how a decision was made, it’s not the AI that’s opaque; it’s the vendor.

For multinational enterprises, AI is now embedded into critical workflows. From invoice validation and contract review to HR screening and compliance reporting, automation directly affects financial accuracy and regulatory standing.

One misclassified invoice could mean paying the wrong vendor. A wrongly flagged compliance report could trigger an audit. A wrongly rejected job application might expose bias.

This is where explainable AI (XAI) becomes essential. Transparency isn’t just a technical nice-to-have. It’s a requirement for trust.

According to PwC, enterprises that adopt explainable AI see 20–30% faster internal adoption rates because employees actually trust the outputs. Without explainability, users often double-check results, which wipes out the efficiency gains AI is supposed to bring.

Running AI systems without transparency creates risks that go far beyond IT.

An Accenture study found that 76% of executives struggle to trust AI systems because they cannot explain the outputs. That distrust directly impacts adoption and ROI.

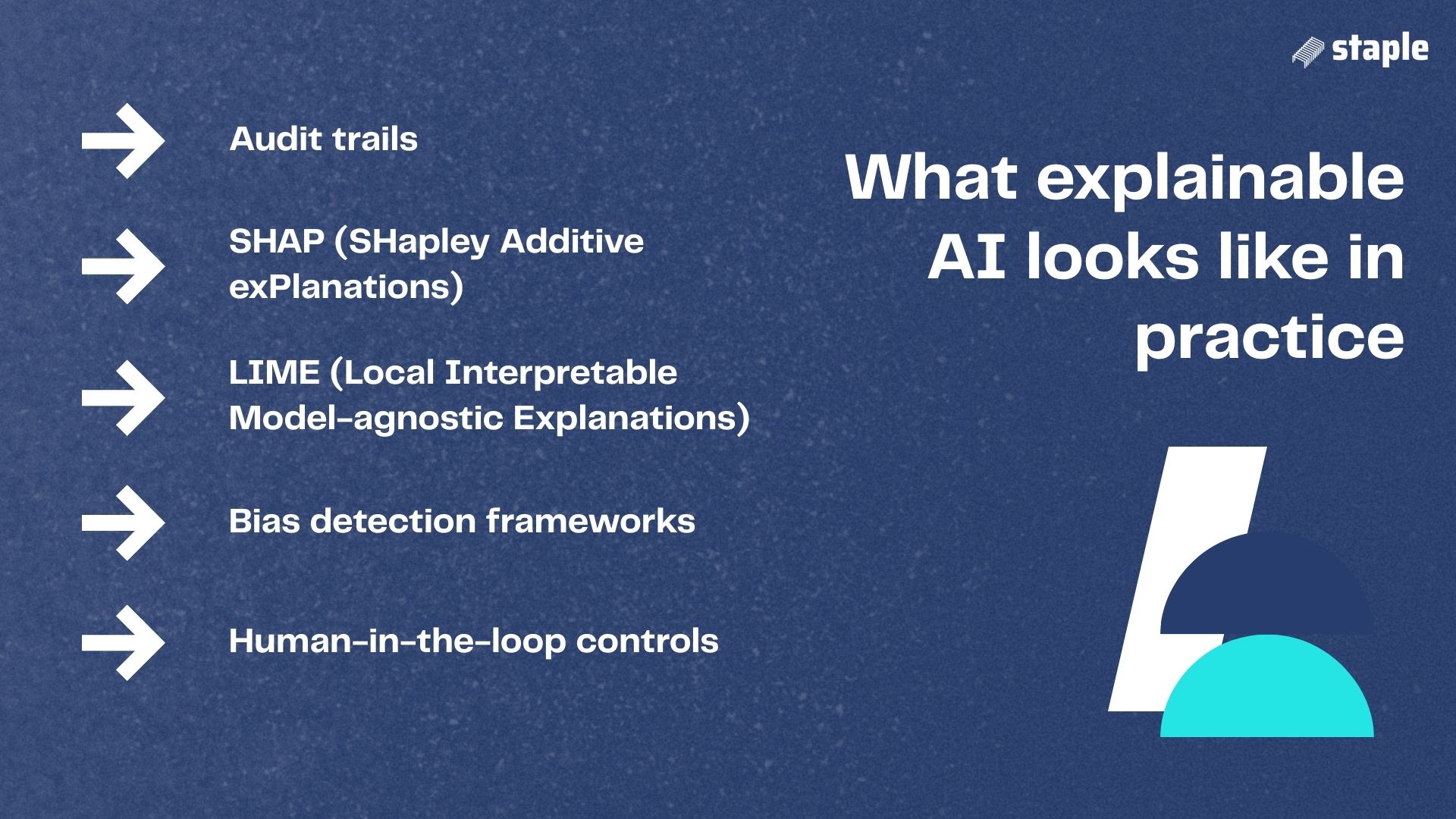

Enterprises don’t need to settle for mystery. Several methods and tools exist today to make AI interpretable, including SHAP, LIME, bias detection frameworks, and transparency dashboards.

These aren’t academic concepts. They’re practical tools enterprises can use today to reduce black box risks and improve trust.
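To make this concrete, here is a minimal sketch of per-feature attribution, the kind of output tools like SHAP and LIME produce. It assumes a hypothetical linear invoice-risk model with made-up feature names, weights, and baseline values. For a linear model with independent features and the feature averages as the baseline, each feature’s contribution is its weight times how far its value sits from the baseline, which matches its SHAP value. The sketch does not call either library; non-linear models need those libraries’ approximations.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical linear invoice-risk model: score = bias + sum(weight * feature)."""
    bias: float
    weights: dict[str, float]

    def predict(self, x: dict[str, float]) -> float:
        return self.bias + sum(w * x[name] for name, w in self.weights.items())


def attribute(model: LinearModel, x: dict[str, float], baseline: dict[str, float]) -> dict[str, float]:
    """Contribution of each feature relative to a baseline input.

    The contributions always sum to predict(x) - predict(baseline); with
    independent features and mean values as the baseline they equal SHAP values.
    """
    return {name: w * (x[name] - baseline[name]) for name, w in model.weights.items()}


# Hypothetical model and inputs, for illustration only.
model = LinearModel(
    bias=0.1,
    weights={"amount_vs_po_gap": 0.8, "new_vendor": 0.5, "duplicate_invoice_no": 1.2},
)
baseline = {"amount_vs_po_gap": 0.05, "new_vendor": 0.2, "duplicate_invoice_no": 0.01}  # "average" invoice
invoice = {"amount_vs_po_gap": 0.40, "new_vendor": 1.0, "duplicate_invoice_no": 0.0}

contributions = attribute(model, invoice, baseline)

# Print the factors from most to least influential.
for name, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>22}: {c:+.3f}")
```

The contributions add up exactly to the gap between this invoice’s score and the baseline score, so a reviewer can see that the new-vendor flag contributed the most to the risk score, rather than just seeing a number.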

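If explainability is so useful, why do some vendors avoid it? Often because transparency would expose the system’s limitations, its errors, or manual intervention happening behind the scenes.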

Whenever a vendor says, “It’s AI, just trust it,” it’s worth asking: trust what, exactly?

A black box doesn’t just create risk. It creates measurable cost.

In short: opacity is expensive.

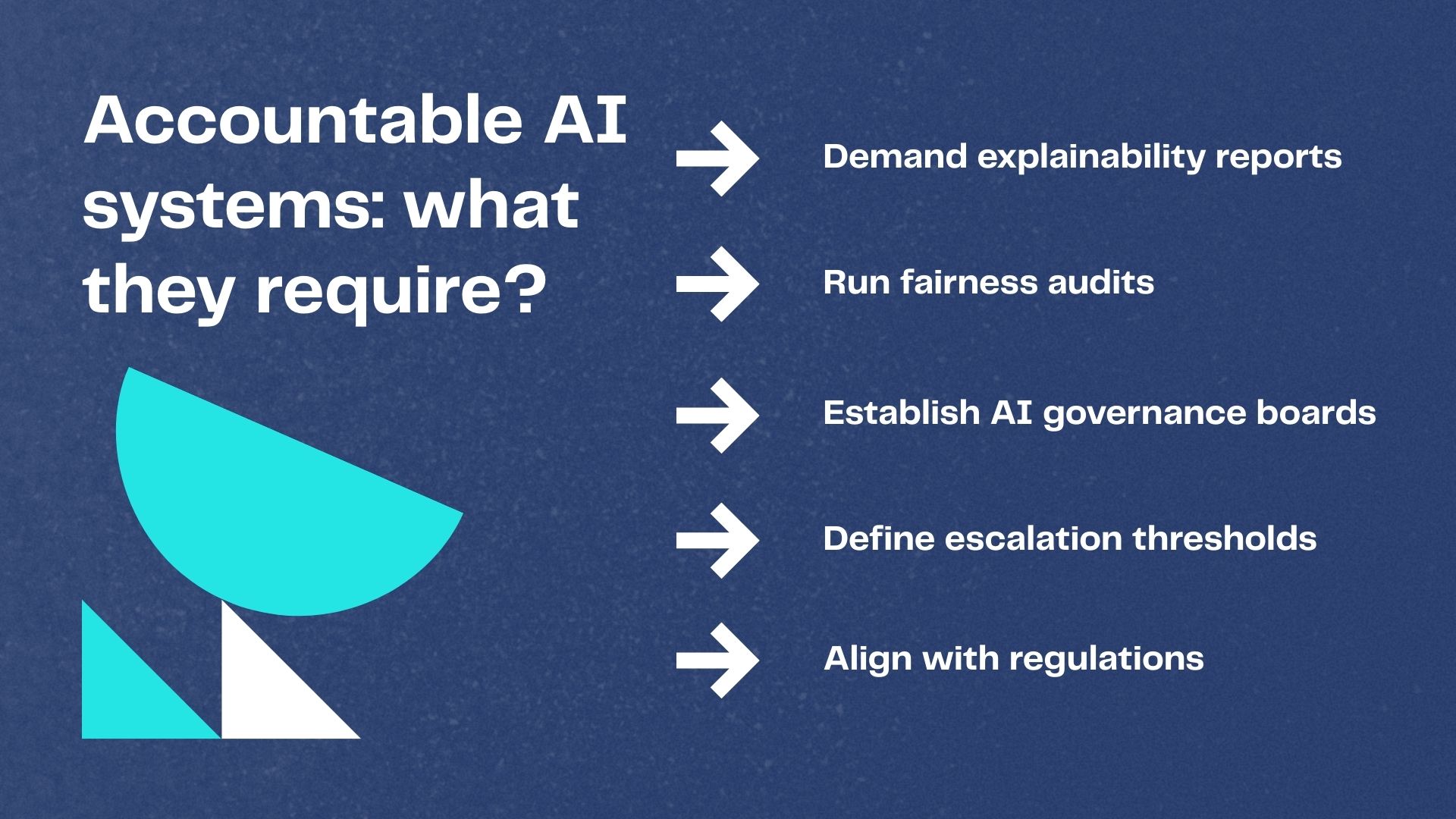

Enterprises building accountable AI systems must go beyond technology. Accountability requires governance, policy, and shared responsibility.

Practical steps include:

The World Economic Forum’s 2023 Global AI Report found that only 20% of enterprises audit AI systems regularly. That leaves the majority vulnerable to both compliance penalties and trust breakdowns.
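An audit is only as good as the records it can check. As a minimal sketch of what an audit trail for AI decisions could capture, the code below logs each decision with its model version, its top contributing factors, and any human reviewer, and chains entries with SHA-256 hashes so that altering a past entry is detectable. The record schema, field names, and hash-chaining design are illustrative assumptions, not a description of any particular product or standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class DecisionRecord:
    """One audit-trail entry per automated decision (hypothetical schema)."""
    document_id: str
    model_version: str
    decision: str
    top_factors: dict[str, float]       # feature name -> contribution from the explainer
    reviewer: Optional[str] = None      # set when a human confirms or overrides the decision
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log; each entry stores the previous entry's hash,
    so altering a past entry breaks verification."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: DecisionRecord) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"record": asdict(record), "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link back to the previous entry.
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {"record": entry["record"], "prev_hash": entry["prev_hash"]}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append(DecisionRecord("INV-001", "risk-model-v3", "flag_for_review",
                            {"new_vendor": 0.4, "amount_vs_po_gap": 0.28}))
trail.append(DecisionRecord("INV-002", "risk-model-v3", "approve",
                            {"amount_vs_po_gap": -0.02}, reviewer="j.doe"))
print(trail.verify())  # True

trail.entries[0]["record"]["decision"] = "approve"  # quietly rewrite history
print(trail.verify())  # False
```

A hash chain on its own only shows the log is internally consistent; it cannot detect entries cut from the end of the log, so one common remedy is to store the latest hash somewhere independent of the log itself.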

Trust doesn’t come from complexity. It comes from clarity. Enterprises should focus on three elements:

When these conditions are met, adoption accelerates. Business users stop treating AI as a black box and start using it as a partner.

McKinsey research shows that enterprises with explainable, trustworthy AI systems realize 30–50% higher ROI on automation projects compared to those with opaque systems.

These examples show that explainability is not an academic exercise; it directly affects enterprise operations.

The phrase “AI is a black box” is often used as a shield. It shifts responsibility away from the vendor and discourages enterprises from asking tough questions. But the reality is:

An enterprise faces a black box only because someone, usually the vendor, wants to keep it that way.

The future of enterprise AI is not opaque. It’s transparent, auditable, and explainable.

To get there, enterprises should:

Enterprises that demand transparency will not only stay compliant but also build greater trust with employees, regulators, and customers.

Most vendors talk about transparency but still leave enterprises stuck with black box AI risk. They deliver results without showing the “how” behind them, or worse, they hide human intervention in the background. That’s exactly what destroys enterprise AI trust.

Staple AI takes a different path. It was built to avoid the black box trap entirely. Instead of vague promises, it embeds explainable AI into every workflow.

Here’s what that looks like:

For finance and operations leaders, this means decisions aren’t just fast. They’re explainable, auditable, and compliant. And when explainability is clear, adoption accelerates. Teams stop second-guessing outputs and start trusting them.

In short, Staple AI helps enterprises move from questioning “what’s inside the box?” to confidently building systems where transparency, compliance, and enterprise AI trust are non-negotiable.

For finance and operations teams, this means every decision is not only fast but also understandable. Enterprises get automation they can trust: accountable AI systems that meet both business needs and regulatory standards.

1. What is explainable AI?

Explainable AI (XAI) refers to techniques that make AI outputs transparent and interpretable for humans.

2. Why is black box AI a risk for enterprises?

It hides how decisions are made, which makes compliance, auditing, and trust nearly impossible.

3. What are AI transparency tools?

Tools like SHAP, LIME, bias detection frameworks, and transparency dashboards.

4. How does AI model explainability work?

It highlights which input factors influenced a model’s decision, making outputs interpretable.

5. Why do some vendors avoid explainability?

To hide limitations, errors, or hidden manual intervention.

6. What is an accountable AI system?

A system with governance, audit trails, and explainability built in.

7. How does explainability improve enterprise AI trust?

It gives users clarity and confidence in outputs, speeding adoption.

8. Is explainable AI required by law?

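Yes, in some regions. GDPR requires meaningful information about the logic involved in certain automated decisions, and the EU AI Act sets transparency obligations for high-risk AI systems.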

9. Can explainability slow down AI?

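Usually not in a way that matters. Most tools run alongside the model without changing its predictions, though generating explanations does add some extra computation.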

10. How does Staple AI handle explainability?

By providing full audit trails and transparent decision-making for every document processed.
