A few months ago, a CFO friend from a large multinational confided in me. He found out that one of his junior analysts had been using ChatGPT to prepare parts of the company’s quarterly board presentation. At first, it sounded harmless. Everyone wants to save time, right? But then came the catch: the analyst had pasted raw financial data into the chatbot, thinking it was a private space.

Turns out, it wasn’t. The data left the company perimeter. And suddenly, what started as a shortcut became a shadow AI compliance risk with potential regulatory fallout.

I’ve seen versions of this story pop up everywhere. Employees experimenting with AI tools on the side. Teams “testing” models without telling IT. Ops staff pasting sensitive data into browser plugins because it saves an hour. These are hidden AI systems at work, and they’re spreading faster than most compliance teams can track.

Now, if you’re in finance or operations at a multinational, ask yourself: would you even know if this was happening in your company?

I don’t mean the officially approved pilots your IT team rolled out. I mean the unapproved AI tools quietly being used in spreadsheets, reports, and chats.

If you don’t have an answer, you’re not alone. A survey earlier this year showed that 55% of workers globally use unapproved AI tools at work, and 40% admit using them even when explicitly banned (TechRadar, 2025). That’s a compliance nightmare in the making.

We’ve been here before. Shadow IT was the buzzword of the 2010s: employees downloading Dropbox, Slack, or Trello before IT had policies in place. It caused governance headaches, but at least those tools were visible. You could monitor network traffic and spot usage.

Shadow AI is different. These are hidden AI systems that don’t just store data; they process it, learn from it, and sometimes even retain it. When an employee pastes PII, financial projections, or confidential RFP data into a free AI chatbot, that data leaves your compliance perimeter. You can’t undo that.

Here’s the kicker: a recent Axios report found some firms discovered 67 different AI tools being used inside their orgs, 90% of them unlicensed. Think about that. If you’re leading finance or ops, how do you even start mapping that risk?

I get it. You probably think, “This is IT’s problem.” But here’s the hard truth: compliance fines don’t stop at IT’s desk. They land squarely in finance and operations.

That means if enterprise AI governance isn’t in place, your team could be dealing with penalties, audits, and a damaged reputation.

Note: It’s not just about penalties. Imagine losing a key client because your company’s name appeared in the press for an AI compliance mishap.

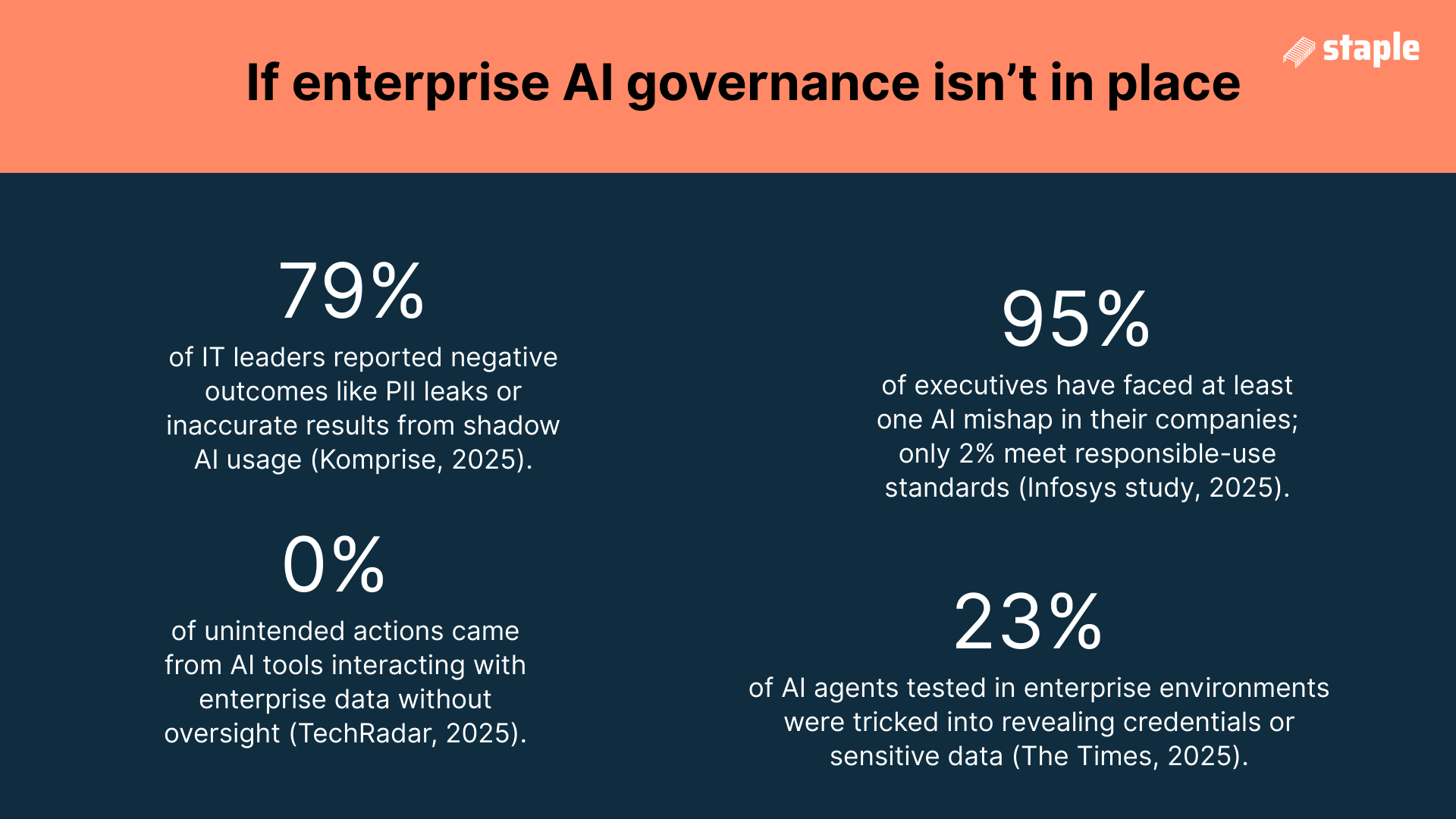

Let me throw some numbers your way because this isn’t just theory:

In my experience, these stats don’t even tell the full story. The real danger is that most of these incidents go undetected until much later, usually when auditors or regulators come knocking. And by then, it’s too damn late.

Everyone talks about AI risk management like it’s a checklist. Draft a policy. Train employees. Done. But that’s bullshit. Policies don’t stop someone from copying and pasting into an AI tool at 11 PM the night before a deadline.

What actually works is visibility. You need to know:

Without that, you’re driving blind.
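To make "visibility" concrete, here is a minimal sketch of a first-pass inventory, assuming your web proxy can export a CSV with `user` and `domain` columns. The column names, the watchlist, and the "approved" domain are all hypothetical placeholders, not a real product's format.

```python
import csv
import io
from collections import defaultdict

# Hypothetical watchlist; a real one would be maintained by your security team.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "ai.internal.example.com"}
# Hypothetical approved tool, used here only to show the licensed/unlicensed split.
APPROVED = {"ai.internal.example.com"}


def summarize_ai_usage(log_file):
    """Count requests and distinct users per AI domain seen in a proxy log."""
    usage = defaultdict(lambda: {"requests": 0, "users": set()})
    for row in csv.DictReader(log_file):
        domain = row["domain"].strip().lower()
        if domain in AI_DOMAINS:
            usage[domain]["requests"] += 1
            usage[domain]["users"].add(row["user"])
    return {
        domain: {
            "requests": stats["requests"],
            "users": len(stats["users"]),
            "approved": domain in APPROVED,
        }
        for domain, stats in usage.items()
    }


# Made-up sample rows standing in for a real proxy export.
SAMPLE_LOG = """user,domain
ana,chatgpt.com
ana,chatgpt.com
raj,ai.internal.example.com
li,chatgpt.com
li,intranet.example.com
"""

report = summarize_ai_usage(io.StringIO(SAMPLE_LOG))
for domain, stats in sorted(report.items()):
    print(domain, stats)
```

A tally like this won’t catch browser plugins or personal devices, but it gives finance and ops a starting number to bring to the conversation with IT.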

Expert Opinion: Shadow AI is not just a security problem; it’s a governance blind spot. Finance and operations leaders must demand transparency because the financial exposure lands on their desks, not just IT’s.

Let’s talk about regulatory issues in AI for a second. Most enterprises today operate in multiple jurisdictions. A European subsidiary may be under GDPR. A U.S. team might handle HIPAA-regulated healthcare data. An Asia-Pacific operation could fall under Singapore’s PDPA or Australia’s Privacy Act.

Now picture employees in each region using different unapproved AI tools. Some may store data in servers outside your control. Others may not have any compliance certifications.

That’s how you end up with fragmented liability. One breach, and suddenly you’re explaining to regulators across three continents why your AI governance was asleep at the wheel.

This is where I want to pause and ask: what’s your role here? You’re not configuring firewalls, but you are responsible for process integrity. You manage data flows. You own vendor compliance.

Finance and ops teams should:

When I’ve worked with teams who ignored these steps, the damage was ugly. Think manual remediation of thousands of documents. Regulatory reporting. Even lawsuits.

I know some leaders who tried the “ban AI” approach. Block ChatGPT. Disable API calls. Issue memos.

Here’s my take: it doesn’t work. Employees are resourceful. If an AI tool saves them hours, they’ll find a way. Blocking just drives AI deeper into the shadows.

Instead, the answer lies in AI risk management that balances innovation with compliance. Give employees approved tools. Monitor usage. Set guardrails. And build enterprise AI governance that’s flexible enough to evolve with regulations.
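As one illustration of a guardrail, here is a rough sketch of a redaction step that an approved internal tool could run before any prompt leaves the company. The patterns and the `redact` helper are assumptions for illustration only; real data-loss-prevention rules are far broader than three regular expressions.

```python
import re

# Illustrative patterns only (hypothetical rules, checked in this order).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "AMOUNT": re.compile(r"[$€£]\s?\d[\d,]*(?:\.\d+)?"),
    "ACCOUNT": re.compile(r"\b\d{8,}\b"),
}


def redact(text):
    """Replace sensitive-looking spans with labels and report which labels fired."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


prompt = "Email jane.doe@example.com: Q3 revenue was $4,200,000 on account 12345678."
safe_prompt, flags = redact(prompt)
print(safe_prompt)
print(flags)
```

The list of labels that fired can also be logged, which gives compliance a record of what employees tried to send without storing the sensitive values themselves.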

I’ll be blunt. The thing that scares me most about shadow AI isn’t just compliance fines. It’s the false sense of security leaders have. Many think: “We have an AI policy. We’re fine.”

But when I ask, “Do you know how many unapproved AI tools are in use at your org right now?” most can’t answer. That’s the silence before the storm.

Here’s how Staple AI tackles the shadow AI compliance headache:

Now, here’s the part I think is most relevant when we talk about shadow AI compliance risk: Staple’s Trust Layer.

When employees use unapproved AI tools, you lose visibility. You don’t know where data went, how it was processed, or whether it can be trusted in an audit. Staple flips that by turning the black box into a glass box.

In short, Staple AI doesn’t just “process” data; it turns it into trusted, verifiable, explainable, and compliant data. Which, if you ask me, is the exact opposite of the mess shadow AI creates with hidden AI systems.

When I saw this in action, it reminded me of moving from messy spreadsheets to a clean audit-ready ledger. For finance and operations, it’s like finally turning the lights on in a room you’ve been stumbling through blind.

1. What is shadow AI?

Shadow AI refers to employees using unapproved or hidden AI systems without IT or compliance oversight.

2. Why is shadow AI a compliance risk?

Because data can leak into external AI models, leading to violations of GDPR, HIPAA, CCPA, or other regulations.

3. How common is shadow AI in enterprises?

Studies show 55% of workers use unapproved AI tools, and 40% use them even when banned.

4. What data is at risk from unapproved AI tools?

Confidential financial reports, personal data (PII), contracts, and operational documents.

5. What are the regulatory issues in AI use?

Key regulations include GDPR, HIPAA, PCI DSS, CCPA, and regional privacy laws like Singapore’s PDPA or Australia’s Privacy Act.

6. How do I detect shadow AI in my company?

Through audits, network monitoring, and tools designed to track enterprise AI usage.

7. What is enterprise AI governance?

A framework that sets rules, monitoring, and accountability for AI usage across an enterprise.

8. Should companies ban AI tools to reduce compliance risk?

Banning doesn’t work; employees find workarounds. The better approach is governance and approved tools.

9. How do finance and operations teams play a role in AI risk management?

They oversee data flows, vendor compliance, and must ensure sensitive information isn’t mishandled.

10. How can Staple AI help with shadow AI compliance risk?

By making AI usage transparent, logging every interaction, and providing audit-ready trails for compliance teams.
