
I heard this from a senior consultant working with a global construction and infrastructure company. They’d just deployed an AI bot to automate invoice management across regions. Everything looked good on paper. Then one vendor’s payment routing was disrupted, and the AI started sending invoices to the wrong country office. The result: duplicate payments, customs penalties, and total confusion in the finance team.

The clean, automated workflow had hidden a $500,000 mistake. It took them weeks to untangle the mess.

That’s when I started asking: Is AI automation actually working just because it looks like it is? For enterprise teams in finance or operations, automation isn’t magic. It can mask real risk, and sometimes those risks are part of broader enterprise AI risks that don’t show up in demos or dashboards.

AI isn’t intelligent on its own. It is only as good as its data. If that data is stale, incomplete, or siloed, you’re throwing mud at the decision engine.

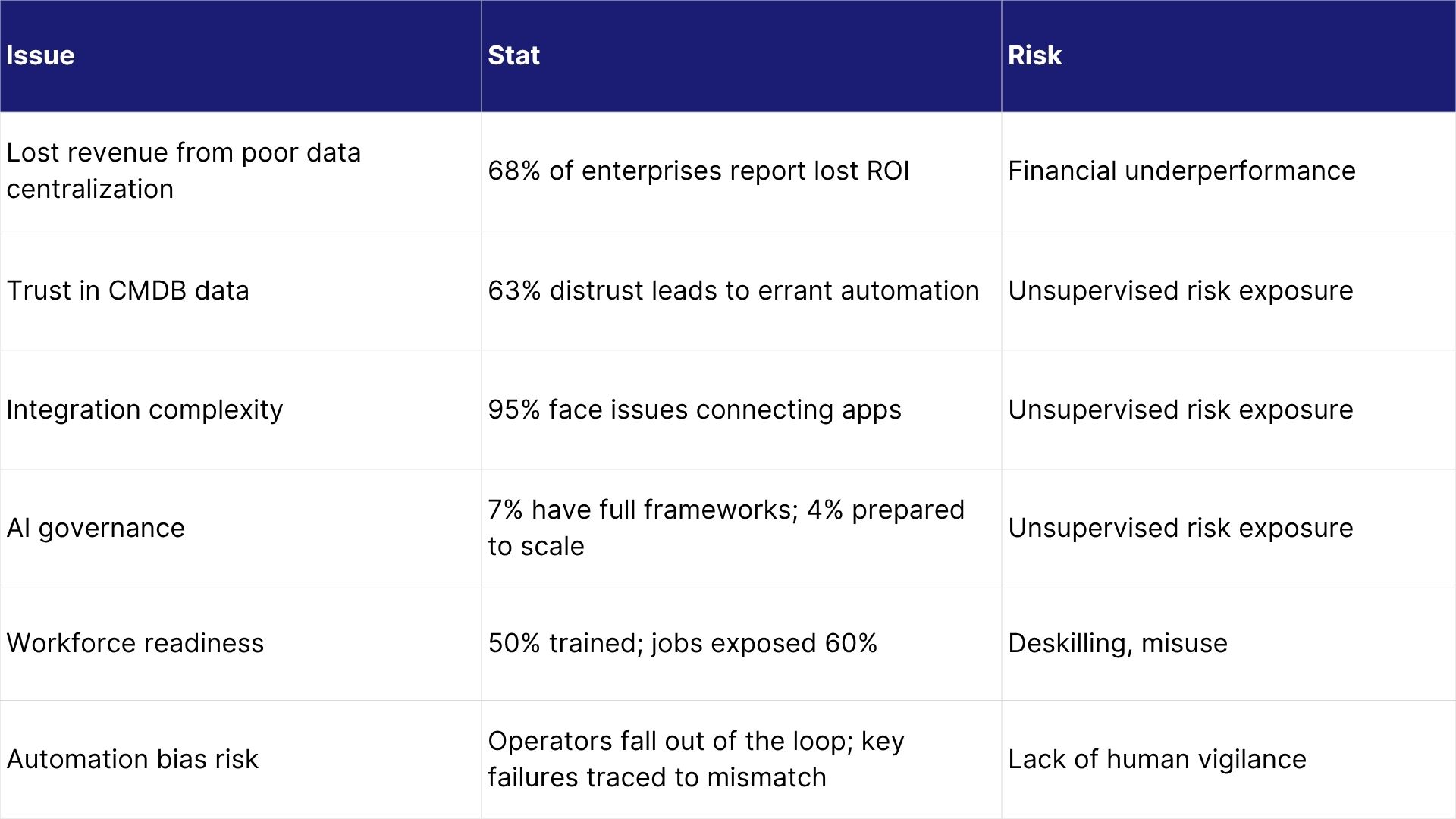

• In one enterprise survey, 68% of respondents reported lost revenue because their data isn’t fully centralized.

• Gartner reports that 63% of businesses don’t trust the data in their configuration management database (CMDB), which means automation built on it may be unreliable.

Without real-time, accurate integration across ERP, CRM, and finance systems, AI might make incorrect cloud decisions, misroute transactions, or even cause compliance misfires. That’s one of the most overlooked AI automation pitfalls.

Too much trust in automation breeds neglect. That’s automation bias.

Humans stop monitoring and simply assume the AI got it right. That’s the “out-of-the-loop” syndrome.

Quick example: you audit a bot’s decisions once, then forget about it. Six months later, the underlying systems have changed, and the human operators are clueless.

This is how hidden flaws creep into AI systems: slowly and without much noise.

LLMs and AI agents often can’t explain why they made a decision.

In banking or legal workflows, that’s a problem. You need traceability. Otherwise, you’re exposed to bias, regulatory scrutiny, or plainly wrong decisions. A lack of AI vendor transparency can be the root cause.

According to one report, 93% of enterprises use AI, but only 7% have fully embedded governance frameworks.

Add the unauthorized tools employees bring in (“shadow AI”), and you’ve got blind spots across security, compliance, and workflows. These are real automation risks in business, not theoretical what-ifs.

AI’s growing role threatens worker autonomy and can cause deskilling or stress. In advanced economies, nearly 60% of jobs may be exposed to AI, and about 19% of U.S. workers are already in highly exposed roles.

Meanwhile, 93% of U.S. workplaces have adopted AI, but only about 50% of workers have received any training. That mismatch leads to misuse or mistrust of automation. Training must cover not just how to operate a tool but also the AI automation pitfalls to watch for.

Reuters warns that AI agents bring greater autonomy, and with it greater legal, privacy, bias, and safety risks.

Even Geoffrey Hinton, a leading AI expert, says many tech leaders downplay these risks, with a few exceptions such as DeepMind’s Demis Hassabis.

That's a red flag when global legal, finance, or operations teams rely on these systems. The demand for enterprise AI compliance has never been higher.

Keep people in the loop

Don’t set it and forget it. You need regular audit gates.

Monitor outputs, retrain models, and validate edge cases, even when the process seems fully “automated.”
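A minimal Python sketch of such an audit gate: decisions the model is unsure about, that carry high financial exposure, or that point to an office the system doesn’t recognize go to a human instead of straight through. The `RoutingDecision` fields, the `triage` helper, and both thresholds are hypothetical, chosen for illustration rather than taken from any particular product.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical output of an invoice-routing model; field names are illustrative.
@dataclass
class RoutingDecision:
    invoice_id: str
    predicted_office: str
    confidence: float  # model confidence in [0, 1]
    amount: float      # invoice amount in one reporting currency


# Illustrative thresholds (assumptions); tune them to your own risk appetite.
MIN_CONFIDENCE = 0.95
MAX_AUTO_AMOUNT = 50_000.0


def needs_human_review(decision: RoutingDecision, known_offices: set[str]) -> list[str]:
    """Return the reasons a decision must go to a human; an empty list means auto-approve."""
    reasons = []
    if decision.confidence < MIN_CONFIDENCE:
        reasons.append("low confidence")
    if decision.amount > MAX_AUTO_AMOUNT:
        reasons.append("high value")
    if decision.predicted_office not in known_offices:
        reasons.append("unknown office")
    return reasons


def triage(decisions, known_offices):
    """Split decisions into an auto-approved list and a human review queue."""
    auto, review = [], []
    for d in decisions:
        reasons = needs_human_review(d, known_offices)
        if reasons:
            review.append((d, reasons))
        else:
            auto.append(d)
    return auto, review


if __name__ == "__main__":
    offices = {"DE", "SG", "US"}
    batch = [
        RoutingDecision("INV-001", "DE", 0.99, 1_200.0),
        RoutingDecision("INV-002", "SG", 0.71, 3_400.0),
        RoutingDecision("INV-003", "FR", 0.98, 80_000.0),
    ]
    auto, review = triage(batch, offices)
    print([d.invoice_id for d in auto])  # ['INV-001']
    for d, why in review:
        print(d.invoice_id, why)
```

Keeping the gate rules explicit, outside the model, makes them easy to audit and to adjust when the underlying systems change.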

Clean, connected, real-time

Centralize and sync transactional data across your systems: CRM, ERP, and finance.

Build high-quality pipelines to avoid data drift. In one report, 41% of companies said real-time issues block AI success.
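Drift is easier to catch when you compare what a pipeline sees today against the baseline it was validated on. One common metric for this is the Population Stability Index (PSI), the sum over categories i of (actual_i - expected_i) * ln(actual_i / expected_i). The sketch below applies it to categorical data, such as the share of invoices routed to each country office. The sample data, the epsilon floor for empty categories, and the 0.2 alert threshold (a commonly cited rule of thumb, not a standard) are assumptions.

```python
import math
from collections import Counter


def shares(labels, categories, eps=1e-6):
    """Share of each category in labels, floored at eps so log() never sees zero."""
    counts = Counter(labels)
    total = len(labels)
    return {c: max(counts[c] / total, eps) for c in categories}


def psi(baseline, current):
    """Population Stability Index between two lists of categorical labels."""
    categories = set(baseline) | set(current)
    expected = shares(baseline, categories)
    actual = shares(current, categories)
    return sum(
        (actual[c] - expected[c]) * math.log(actual[c] / expected[c])
        for c in categories
    )


# Commonly cited rule of thumb (assumption): below 0.1 stable,
# 0.1 to 0.2 moderate shift, above 0.2 significant shift.
ALERT_THRESHOLD = 0.2

if __name__ == "__main__":
    # Hypothetical routing history: a new office ("FR") suddenly takes 30% of invoices.
    baseline = ["DE"] * 50 + ["SG"] * 30 + ["US"] * 20
    current = ["DE"] * 20 + ["SG"] * 30 + ["US"] * 20 + ["FR"] * 30
    score = psi(baseline, current)
    print(f"PSI = {score:.2f}, alert = {score > ALERT_THRESHOLD}")
```

A category that never appeared in the baseline drives the score up sharply, which is usually what you want: it’s exactly the kind of silent change that misroutes invoices.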

Use self-healing governance to fix inconsistencies as they appear. And yes, this is key to managing AI automation pitfalls.
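“Self-healing” governance can mean many things; one simple, conservative version is rule-based reconciliation. In the sketch below, each field has a designated system of record: mismatches in those fields are corrected automatically and logged, and any other mismatch is flagged for a person instead of guessed. The `SYSTEM_OF_RECORD` mapping, the system names, and the field names are hypothetical.

```python
# Hypothetical: which system is the source of truth for each vendor field.
SYSTEM_OF_RECORD = {"country": "erp", "bank_account": "finance"}


def reconcile(vendor_id, records):
    """Compare one vendor's fields across systems.

    records maps system name -> dict of fields (mutated in place when fixed).
    Fields with a designated system of record are corrected everywhere else;
    all other mismatches are returned as flags for human review.
    """
    fixes, flags = [], []
    fields = set().union(*(r.keys() for r in records.values()))
    for field in sorted(fields):
        values = {system: r.get(field) for system, r in records.items()}
        if len(set(values.values())) <= 1:
            continue  # consistent across all systems
        owner = SYSTEM_OF_RECORD.get(field)
        if owner in records and records[owner].get(field) is not None:
            truth = records[owner][field]
            for system, value in values.items():
                if value != truth:
                    records[system][field] = truth
                    # Audit trail entry: who changed, what, from, to.
                    fixes.append((vendor_id, system, field, value, truth))
        else:
            flags.append((vendor_id, field, values))
    return fixes, flags


if __name__ == "__main__":
    recs = {
        "erp": {"country": "DE", "name": "Acme"},
        "finance": {"country": "SG", "name": "ACME GmbH"},
    }
    fixes, flags = reconcile("V1", recs)
    print("auto-fixed:", fixes)
    print("needs review:", flags)
```

The split matters more than the code: only fields with an agreed owner are auto-corrected, so the “self-healing” part never silently overwrites data nobody has claimed responsibility for.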

Build frameworks early

Start governance before deployment, not as an afterthought. Only about 8% of organizations embed AI controls in the development lifecycle.

Map all AI tools, authorized or shadow, and manage prompt-injection and data-flow risks.
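Mapping AI tools can start as a comparison between an approved list and what actually shows up in network or SSO logs. The sketch below flags unapproved (shadow) tools and approved tools that receive data above their permitted sensitivity level. The classification levels, tool names, and event format are hypothetical, and the sketch does not address prompt injection, which needs separate controls.

```python
# Hypothetical data classification levels, lowest to highest sensitivity.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


def audit_ai_usage(approved, events):
    """Find shadow AI tools and data-flow violations.

    approved: tool name -> highest data class that tool may receive.
    events: iterable of (tool, data_class) pairs, e.g. parsed from proxy logs.
    Returns (sorted shadow tools, sorted (tool, data_class) violations).
    """
    shadow, violations = set(), set()
    for tool, data_class in events:
        if tool not in approved:
            shadow.add(tool)  # in use but never approved
        elif LEVELS[data_class] > LEVELS[approved[tool]]:
            violations.add((tool, data_class))  # approved tool, too-sensitive data
    return sorted(shadow), sorted(violations)


if __name__ == "__main__":
    approved = {"assistant-a": "internal", "ocr-tool": "confidential"}
    events = [
        ("assistant-a", "public"),
        ("chat-x", "internal"),
        ("assistant-a", "confidential"),
        ("ocr-tool", "confidential"),
    ]
    print(audit_ai_usage(approved, events))
```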

Ensure transparency in decision logic, particularly for automated decisions in finance, compliance, and contract and agreement workflows.
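Transparency in decision logic starts with recording, for every automated decision, what went in, what came out, which model version produced it, and why. A minimal sketch, assuming a JSON Lines file as the audit log; the field names and the `decision_record` and `append_to_log` helpers are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def decision_record(*, model_version, inputs, output, reason, reviewer=None):
    """Build one audit record for an automated decision."""
    canonical = json.dumps(inputs, sort_keys=True)  # key order doesn't change the hash
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "inputs": inputs,
        "output": output,
        "reason": reason,      # rule or explanation that produced the output
        "reviewer": reviewer,  # None means no human touched this decision
    }


def append_to_log(log_path, record):
    """Append a record as one line of a JSON Lines file."""
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
```

Usage would look like `append_to_log("decisions.jsonl", decision_record(model_version="router-v3", inputs={...}, output="DE office", reason="vendor master country field"))`. Hashing the canonicalized inputs means the same inputs always produce the same digest, so an auditor can later check a logged decision against the source data; in production you would want tamper-evident storage rather than a plain file.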

Run bias and privacy audits routinely. It’s one way to increase AI vendor transparency and reduce enterprise AI risks.

Train staff on how AI works, what it can’t do, and when to intervene.

Engage legal, risk, and ops teams early. Avoid finger-pointing later.

At Staple AI, we focus on data readiness and continuous oversight. Our AI models integrate with enterprise data pipelines, enforce governance rules in real time, and keep humans “in the loop” so that logic is transparent, traceable, and auditable. I’ve used it in real rollouts, and seeing dashboards flag drift before it hit finance forecasts gave me actual peace of mind.

Staple AI doesn’t just automate; it turns automation into something verifiable. Most automation tools work like black boxes. You feed data in, and they spit something out. But how do you trace the decision? How do you verify what the tool used, ignored, or inferred?

Staple’s trust layer changes the game. It validates every field, maps every model decision, and logs the complete data lineage. There’s built-in compliance, with support for ISO standards, audit trails, and even tax regulation validation in 300+ languages. So, whether your data is coming from an ERP or a stamped delivery note, the AI doesn’t just extract it; it explains it.

That means fewer silent failures, fewer raised eyebrows from auditors, and far more confidence when you say, “Yes, this came from the original document, and here’s how we know.”

For global finance and ops teams juggling formats, rules, and jurisdictions, this kind of clarity isn’t nice to have. It’s survival.

So, if automation already powers your workflows, but trust in the results still feels shaky, Staple AI is what makes it trustworthy, explainable, and ready for enterprise scale.

If you're managing AI in finance or ops at a multinational, this stuff matters. Because what looks like frictionless automation can hide huge cost, compliance, or brand risk. When automation isn’t what it seems, blind trust can tank budgets or erode credibility. That’s the danger of ignoring automation risks in business.

Reach out to us: